Self-hosting this blog

I spent a few hours this weekend collecting the remnants of past blogs, dating back 20 years, and migrating them to a fresh Hugo blog. This very one, in fact! It’s hosted on a VPS, and I don’t want to spend too much time setting it up and maintaining it.

Prerequisites

Just a couple of things to do before setting up the server itself: DNS and a service email.

You’ll want to point your DNS at the IP address your server is going to be reachable at. This is necessary as the cloud-init script I used will automatically get an SSL certificate for the domain in question. If the DNS can’t be resolved, no certificate can be issued.

All you need is an A record, and possibly a CNAME.

    ; A record
    j23n.com.      300 IN A     95.217.180.197

    ; CNAME record
    www.j23n.com.  300 IN CNAME j23n.com.
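Since the certificate request depends on the records resolving, it can be worth checking them before you provision. A minimal sketch, assuming dig is installed locally; dns_points_to is a hypothetical helper name, not part of any tool:

```shell
# Succeeds if the expected IP appears in a list of resolved records
# (one per line, as printed by `dig +short`). Hypothetical helper.
dns_points_to() {
    expected_ip=$1
    resolved=$2
    printf '%s\n' "$resolved" | grep -Fxq -- "$expected_ip"
}

# Usage (needs network access, so left commented out):
# dns_points_to 95.217.180.197 "$(dig +short A j23n.com)" && echo "A record OK"
# dns_points_to 95.217.180.197 "$(dig +short A www.j23n.com)" && echo "www OK"
```

The match is on whole lines, so the CNAME target that dig prints for the www name is simply ignored.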

After banging my head against the wall for a bit, I decided to use a separate email account for my sysadmin messages. This way, I just send emails to myself, using my mail provider. No fiddling with an SMTP relay or worrying about emails getting lost. It’s not much of an attack surface either, as the account only has access to the sysadmin emails.

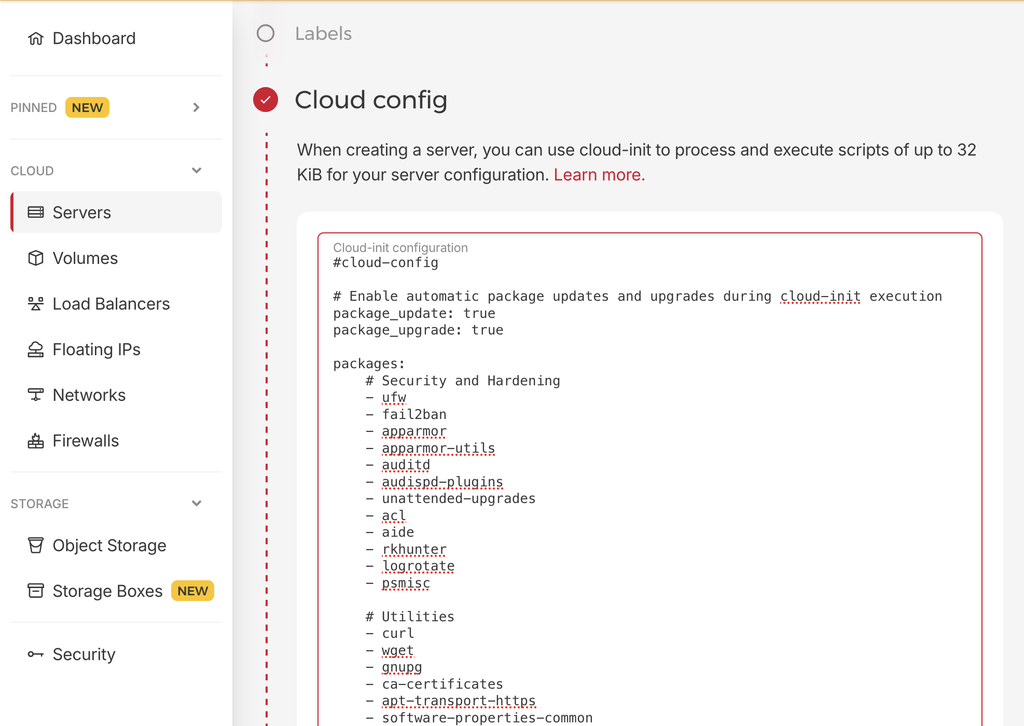

Server setup with cloud-init

Hetzner has a neat feature: specifying a cloud-init file during the VPS setup! This will set up the machine just how you want.

I found a great basic config on Hacker News a while ago but can’t find it anymore. The one I wrote is based on it. Thank you, forgotten hero!

This was the first time I used cloud-init, and I was quite the fan! The script is run right when the server first boots. A couple of pointers:

- log into your VPS and check the status with cloud-init status

- in case something goes wrong, detailed logs can be found at /var/log/cloud-init-output.log
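The two pointers above can be combined into a small check. This is a sketch, assuming the usual "status: ..." line in the output of cloud-init status; cloud_init_state is a hypothetical helper name:

```shell
# Extract the state from `cloud-init status` output, which contains a
# line such as "status: done". Hypothetical helper name.
cloud_init_state() {
    sed -n 's/^status:[[:space:]]*//p'
}

# Usage on the server (left commented out; needs cloud-init):
# cloud-init status --wait                        # block until the first-boot run finishes
# cloud-init status | cloud_init_state            # prints e.g. done, running or error
# sudo tail -n 50 /var/log/cloud-init-output.log  # look here if the state is error
```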

Let’s step through what the script does.

Included software

First, it declares all kinds of packages to be installed. These are mostly there to harden the server against intrusions: unattended-upgrades keeps the system up to date, and fail2ban blocks most unwanted login attempts and HTTP probing.

    [...]
    packages:
      # Security and Hardening
      - ufw
      - fail2ban
      - apparmor
      - auditd
      - unattended-upgrades
      - acl
      # Utilities
      [...]
      # Server
      - nginx
      - certbot
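Once the server is up, you may want to confirm that the packages actually landed. A minimal sketch, assuming a Debian or Ubuntu image with dpkg; missing_packages is a hypothetical helper, and only the packages named above are listed:

```shell
# Print every wanted package (arguments) that is absent from the list of
# package names read from stdin, one name per line.
missing_packages() {
    installed=$(cat)
    for pkg in "$@"; do
        printf '%s\n' "$installed" | grep -Fxq -- "$pkg" || printf '%s\n' "$pkg"
    done
}

# Usage on the server (commented out; needs dpkg). Note that dpkg-query
# lists every package known to dpkg, which on a fresh server is
# effectively the set of installed ones.
# dpkg-query -W -f='${Package}\n' | missing_packages ufw fail2ban apparmor auditd unattended-upgrades acl nginx certbot
```

No output means nothing is missing.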

A sudo user

I’m setting up a deploy user, which I will be using for most of the interactions with the server.

General config

Then follows a series of configuration files.

- /etc/ssh/sshd_config. No root login, no password auth, only use keys. Pretty standard.

- /etc/sysctl.d/99-security.conf. A bunch of kernel parameters for hardening things. I’m taking these on good faith.

- /etc/fail2ban/jail.local. Jails for those that trigger any rate limiting for nginx and ssh.

- /etc/apt/apt.conf.d/50unattended-upgrades. The name speaks for itself.

- /etc/nginx/sites-available/<your-domain>. nginx configuration for HTTP with various security headers set. Running certbot later will rewrite this file to redirect HTTP requests to HTTPS, keeping the rest of the config for both HTTP/HTTPS.
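To check that the sshd settings described above are really in effect, you can inspect the effective configuration that sshd -T prints. This is a sketch only; sshd_is_hardened is a hypothetical helper, and the three settings are an interpretation of "no root login, no password auth, only use keys":

```shell
# Read `sshd -T` output (effective settings as lowercase "keyword value"
# lines) from stdin and fail if any of the three settings differs.
sshd_is_hardened() {
    config=$(cat)
    for want in "permitrootlogin no" "passwordauthentication no" "pubkeyauthentication yes"; do
        printf '%s\n' "$config" | grep -Fxq -- "$want" || {
            echo "unexpected or missing: $want" >&2
            return 1
        }
    done
}

# Usage on the server (commented out; needs root and sshd):
# sudo sshd -T | sshd_is_hardened && echo "sshd settings look right"
```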

Monitoring

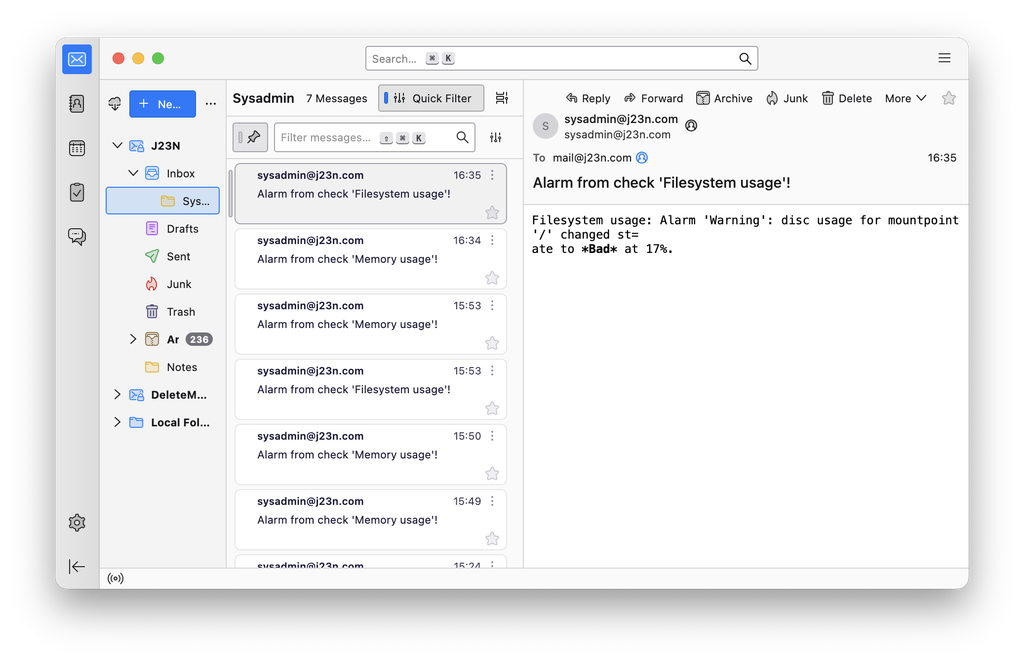

I wanted to add some simple, low-key monitoring to this setup. No Grafana, no Prometheus. Just an email digest once a week or an alert if something is seriously broken.

At first, I cobbled together a few bash scripts with the help of Claude Code (nifty, but there are errors!) that are deployed to the server as part of cloud-init. Frankly, this seemed neither robust nor comprehensive, so I looked for alternatives again and found minmon, which seems to do exactly what I am looking for.

I’m using just a few basic checks, paired with alarms:

    # Minmon config with the following checks
    # - systemd service
    # - filesystem usage
    # - memory usage
    # - load pressure for CPU/IO/Memory

    ## Service state
    [[checks]]
    interval = 60
    name = "Services"
    type = "SystemdUnitStatus"
    units = [
      "auditd.service",
      "chrony.service",
      "fail2ban.service",
      "nginx.service",
      "ssh.service",
      "unattended-upgrades.service"
    ]

    [[checks.alarms]]
    name = "Warning"
    action = "Email"
    [...]

    ## Memory usage
    [[checks]]
    interval = 60
    name = "Memory usage"
    type = "MemoryUsage"
    memory = true

    [[checks.alarms]]
    name = "Warning"
    action = "Email"
    [...]

    ## Disk usage
    [[checks]]
    interval = 60
    name = "Filesystem usage"
    type = "FilesystemUsage"
    mountpoints = ["/"]
    placeholders = {"check_description" = "disc usage for mountpoint '/'"}

    [[checks.alarms]]
    name = "Warning"
    level = 75
    action = "Email"
    [...]

    ## Pressure average
    [[checks]]
    interval = 60
    name = "Pressure averages"
    type = "PressureAverage"
    cpu = true
    io = "Both"
    memory = "Both"
    avg60 = true

    [[checks.alarms]]
    name = "Warning"
    action = "Email"
    [...]

All of these alarms map to just a single Email action (also called Email 🙈), which sends me an email containing a one-liner about which alarm has been triggered. Beautifully simple, and it even runs as a systemd service.

    # Actions
    # all checks map to the same single action. This action will send an email
    # to the administrator
    [[actions]]
    name = "Email"
    type = "Email"
    from = "${MINMON_EMAIL_FROM}"
    to = "${ADMIN_EMAIL}"
    subject = "Alarm from check '{{check_name}}'!"
    body = "{{check_name}}: Alarm '{{alarm_name}}': {{check_description}} changed state to *{{alarm_state}}* at {{level}}."
    smtp_server = "${SMTP_HOST}"
    username = "${SMTP_USERNAME}"
    password = "${SMTP_PASSWORD}"

I tried this out using stress and artificially low level thresholds in the alarms, and the emails started to stream in.
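If you want to watch the numbers yourself during such a test, the Linux kernel exposes pressure stall information under /proc/pressure. A minimal sketch, assuming that this is the data the pressure check is based on and that your kernel has PSI enabled; psi_avg60 is a hypothetical helper, and the stress arguments are just example values:

```shell
# Print the avg60 value of the "some" or "full" line from PSI data on
# stdin, e.g. "some avg10=0.00 avg60=1.25 avg300=0.40 total=12345".
psi_avg60() {
    kind=$1
    awk -v kind="$kind" '$1 == kind {
        for (i = 2; i <= NF; i++)
            if ($i ~ /^avg60=/) { sub(/^avg60=/, "", $i); print $i }
    }'
}

# Usage while generating load (commented out; needs Linux with PSI and stress):
# stress --cpu 4 --timeout 120 &
# psi_avg60 some < /proc/pressure/cpu
```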

Simple, elegant and flexible. It’s also possible to parse the output of various processes (e.g. to check the status of fail2ban) using the ProcessOutputMatch check. But I figured that this is enough. I’m not hosting anything mission critical after all.
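For the fail2ban case, the kind of output such a check could match comes from fail2ban-client. This is only a sketch of the parsing side; the jail name sshd and how you would wire this into minmon are assumptions, and banned_count is a hypothetical helper:

```shell
# Extract the "Currently banned" count from `fail2ban-client status <jail>`
# output read from stdin. Hypothetical helper name.
banned_count() {
    sed -n 's/.*Currently banned:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# Usage on the server (commented out; needs root and fail2ban):
# sudo fail2ban-client status sshd | banned_count
```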

Provisioning

When creating a new server, I just copy and paste my cloud-init.yaml into the text field. This really couldn’t be easier. Thank you Hetzner!

(luckily my script is currently only 25KB in size. Aha!)

The server will be fully configured in a couple of minutes.
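Because the whole file has to fit into the user data field, a quick size check before pasting can save a failed attempt. A minimal sketch; fits_user_data is a hypothetical helper, and the 32 KiB default is an assumption about the provider's limit that you should verify against its current documentation:

```shell
# Succeed if the file is no larger than the limit in bytes.
# The 32768-byte (32 KiB) default is an assumed user-data limit.
fits_user_data() {
    file=$1
    limit=${2:-32768}
    [ -f "$file" ] || return 2
    size=$(wc -c < "$file")
    size=$((size))   # normalise any padding that wc adds on some systems
    [ "$size" -le "$limit" ]
}

# Usage:
# fits_user_data cloud-init.yaml && echo "small enough to paste"
```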

Deployment

Copying the static files of my blog couldn’t be easier. I have a little deploy.sh script in my repository that does the heavy lifting. The only interesting thing here is that I use staticrypt.

    #!/bin/bash

    # <me> is a placeholder for the deploy user
    DEPLOYMENT_USER="<me>"
    DEPLOYMENT_HOST=j23n.com
    DEPLOYMENT_PATH=/srv/www/j23n.com/

    # abort on the first error and echo each command
    set -e -x

    # build website into public/
    hugo

    # encrypt private blog posts in public/private
    staticrypt public/private/* -r -d public/private \
      --template-color-secondary "#FFFFFF" \
      --template-color-primary "#2bbc8a" \
      --template-instructions "It's nice to not share everything with everyone 🥷. If you know me, just reach out and I'll send you a link or the password 🥇"

    # rename the feed file to something based on the salt for staticrypt. It's just supposed to be random
    SALT=$(< .staticrypt.json)
    source .env
    randomurl="${SALT}${STATICRYPT_PASSWORD}"
    mv public/private/index.xml "public/private/${randomurl}.xml"

    # make deployment directory
    ssh -t "${DEPLOYMENT_USER}@${DEPLOYMENT_HOST}" "mkdir -p ${DEPLOYMENT_PATH}"

    # sync the files
    rsync -avz --progress --delete public/ "${DEPLOYMENT_USER}@${DEPLOYMENT_HOST}:${DEPLOYMENT_PATH}"

And that’s how I roll out an update. Easy!
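One thing to be aware of: deploy.sh above builds the feed name by concatenating the contents of .staticrypt.json with the password, so both end up in a URL. If you'd rather not expose them, a hashed variant could look like the sketch below. It is not what the script above does; feed_name is a hypothetical helper, and sha256sum (GNU coreutils) is assumed to be available:

```shell
# Derive a stable, random-looking file name from two secrets without
# embedding either of them in the URL. Hypothetical helper name.
feed_name() {
    salt=$1
    password=$2
    printf '%s%s' "$salt" "$password" | sha256sum | cut -d ' ' -f 1
}

# Usage inside deploy.sh (commented out):
# mv public/private/index.xml "public/private/$(feed_name "$SALT" "$STATICRYPT_PASSWORD").xml"
```

The name stays the same between deployments as long as the salt and password do, so existing feed subscriptions keep working.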

More monitoring?

Yes, there’s more! I use tinylytics.app for uptime monitoring and rudimentary view counts. If the site goes down, I’ll get an email.